When President Biden signed his executive order on AI in October 2023, it felt like a watershed moment. The order, titled “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence,” was a direct response to growing concerns about AI, from safety risks to privacy violations. Biden’s administration recognized that AI was no longer a distant dream; it was here, affecting industries, influencing decisions, and raising ethical questions. To address these issues, the order required developers of the most powerful AI systems to share safety test results with the government and instructed federal agencies to create standards to mitigate risks. It also emphasized equity and civil rights, a hallmark of Biden’s broader policy agenda.

For a time, it seemed like the U.S. was carving a clear path toward responsible AI development. This executive order was part of a larger vision to ensure that innovation would not come at the expense of societal well-being. The tech sector, though cautious about additional regulations, largely welcomed the clarity. The order struck a balance: encouraging progress while guarding against harm.

Fast forward to January 14, 2025, and President Biden’s focus shifted to infrastructure. He issued another executive order, this time aimed at accelerating the construction of AI data centers and clean energy facilities. This move made sense; as AI applications expanded, so did the demand for computational power. Biden’s plan directed the Departments of Defense and Energy to lease federal lands for private companies to build large-scale AI data centers. It was a nod to the importance of staying competitive in AI, especially as nations like China ramped up their own capabilities.

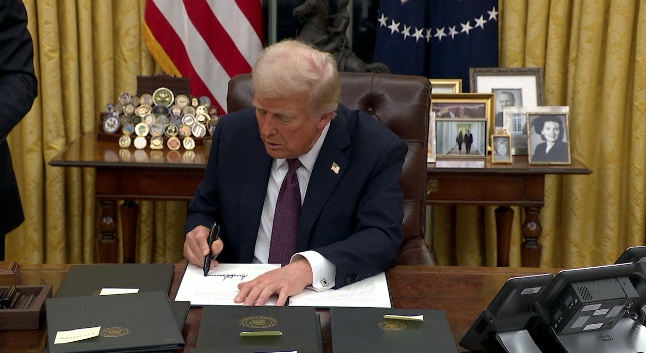

Just days later, on January 20, 2025, President Donald Trump rescinded Biden’s 2023 executive order on AI safety. The revocation was a stark departure from the previous administration’s cautious optimism. Trump’s move signaled a shift toward a less regulated approach to AI, one that prioritized rapid development over potential risks.

The rationale was clear: Trump’s administration saw regulation as a hindrance to innovation. In its view, the U.S. needed to double down on AI development to maintain its competitive edge against global rivals. The focus was less on safety and equity and more on ensuring that American companies could innovate without being bogged down by what the administration saw as bureaucratic red tape.

I have to admit, this change raises some important questions. Are we prioritizing short-term gains at the expense of long-term safety? Or is this a necessary course correction to ensure that America stays ahead in the AI race? It’s hard to say. What’s clear is that the pendulum of U.S. AI policy swings widely depending on who is in the Oval Office.

Looking ahead, I think we’re entering a new phase in how the U.S. approaches AI. With Trump’s administration in charge, we can expect a heavy emphasis on infrastructure, development, and deregulation. This approach will likely accelerate innovation and attract significant investment. However, it also leaves questions about accountability and ethics. Who ensures that AI doesn’t inadvertently harm individuals or communities? How do we protect consumers in this rapidly evolving landscape?

The contrast between these administrations underscores a broader tension in AI policy: the need to balance innovation with responsibility. Both are critical, and finding that balance is easier said than done. As the technology evolves, so too must our strategies for managing it. Perhaps the answer lies not in swinging between extremes but in finding common ground that combines the best of both approaches.

What’s undeniable is that the future of AI in America is still being written. Each executive order, each policy decision, adds another chapter to the story. And as we move forward, I hope we can learn from the past to chart a course that benefits everyone.